Contents

- Loss Functions (SVM, softmax)

- Regularization

- Optimization (Random Search, Gradient descent)

After Classifying, we need to ...

- Define a loss function that quantifies our unhappiness with the scores across the training data.

- Come up with a way of efficiently finding the parameters that minimize the loss function. (optimization)

Loss Function

Given a dataset of examples $\{(x_i, y_i)\}^N_{i=1}$ where $x_i$ is image and $y_i$ is label,

Loss over the dataset is a sum of loss over examples:

$L = \frac{1}{N}\sum_i{L_i(f(x_i,W), y_i)}$

(multiclass) Hinge loss:

Given an example ($x_i$, $y_i$) where $x_i$ is the image and where $y_i$ is the label,

and using the shorthand for the scores vector: s = f($x_i$, W)

Hinge loss is

$L_i = \sum_{j\neq y_i}max(0, s_j - s_{y_i}+1)$

+) 1 is the value for the margin, bringing it closer to ground truth and widening the gap with other labels.

The value 1 is somewhat aribtrary.

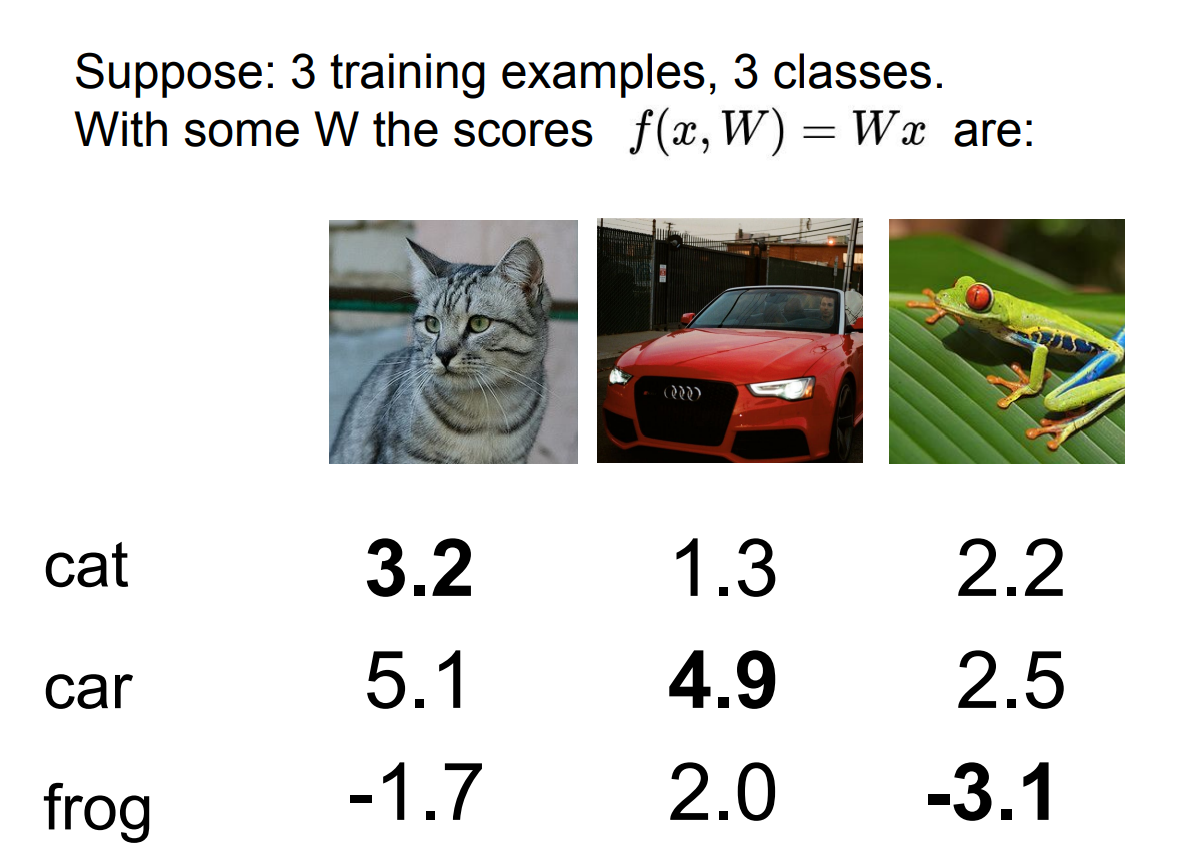

Example

The SVM loss of cat

= the loss from the car + the loss from the frog

= max(0, 5.1-3.2+1) + max(0, -1.7-3.2+1)

= max(0, 2.9) + max(0, -3.9)

= 2.9 + 0

= 2.9

The SVM loss of car

= the loss from the cat + the loss from the frog

= max(0, 1.3-4.9+1) + max(0, 2.0-4.9+1)

= max(0, -2.6) + max(0, -1.9)

= 0 + 0

= 0

The SVM loss of frog

= the loss from the cat + the loss from the car

= max(0, 2.2-(-3,1)+1) + max(0, 2.5-(-3,1)+1)

= max(0, 6.3) + max(0, 6.6)

= 6.3 +6.6

= 12.9

The average SVM loss over full dataset

= $\frac{1}{3}(2.9+0+12.9)$

= 5.27

Questions of SVM

Q1: What happens to loss if car scores change a bit?

No change in loss of the car

Q2: What is the min/max possible loss?

0 ~ infinity

Q3: At initialization W is small so small approximates to 0. What is the loss?

# of classes - 1

Q4: What if the sum was over all classes? (including j = y_i)

The loss increases by 1.

Q5: What if we used mean instead of sum?

No change

Q6: What if we used the squared term? (L_i = $\sum_{j\neq y_i}max(0, s_j - s_{y_i}+1)^2$)

Change. Different classfication algorithm

Code for SVM loss

def L_i_vercotrized(x, y, W):

scores = W.dot(x)

margins = np.maximum(0, scores - scores[y] + 1)

margins[y] = 0

loss_i = np.sum(margins)

return loss_i

Suppose that we found a W such that L = 0. Is this W unique? No! 2W also has L = 0!

Regularization

Among competing hypotheses,

the simplest is the best.

- William of Ockham

- Data loss: Model predictions should match training data. $\frac{1}{N}\sum_{i=1}^NL_i(f(x_i, W), y_i)$

- Regularization: Model should be simple, so it works on test data. $\lambda R(W)$

Therefore, the loss function is

$L(W)=\frac{1}{N}\sum_{i=1}^NL_i(f(x_i, W), y_i)+\lambda R(W)$

The type of Regularization

+++ L2 Regularization 관해서 한번 더 듣기

Softmax Classifier

Scores = unnormalized log probabilities of the classes

$P(Y=k|X=x_i)= \frac{e^{s_k}}{\sum_je^{s_j}}\ \textup{(softmax function)} \ where \ s=f(x_i;W)$

Want to maximize the log likelihood or to minimize the negative log likelihood of the correct class:

$L_i = -logP(Y=y_i|X=x_i)$

in summary,

$Li = - log(\frac{e^{s_k}}{\sum_je^{s_j}})$

Example

1. unnormalized log probabilities

2. convert log probabilites to probablities with exponential function

| unnormalized log prob. | unnormalized prob. | prob. | |

| cat | 3.2 | e^{3.2} = 24.5 | |

| car | 5.1 | e^{5.1}=164.0 | |

| frog | -1.7 | e^{-1.7}=0.18 |

3. normalized probabilites

| unnormalized log prob. | unnormalized prob. | prob. | |

| cat | 3.2 | e^{3.2} = 24.5 | 24.5/(24.5+164.0+0.18)=0.13 |

| car | 5.1 | e^{5.1}=164.0 | 164.0/(24.5+164.0+0.18)=0.87 |

| frog | -1.7 | e^{-1.7}=0.18 | 0.18/(24.5+164.0+0.18)=0.00 |

4. Compute loss with "-log(prob)"

L_cat = -log(0.13) = 0.89

Question of cross-entropy loss

Q1: What is the min/max possible loss L_i?

0~infinity

Q2: Usually at initialization W is small so all s approximates to 0. What is the loss?

log(# of classes)

Difference between SVM and Softmax

Q. Suppose I take a datapoint and jiggle a bit. What happens to the loss in both cases?

A. In Hinge loss, if there is a greater than 1, there is no change.

In cross-entropy loss, it continues to push the probability towards 1.

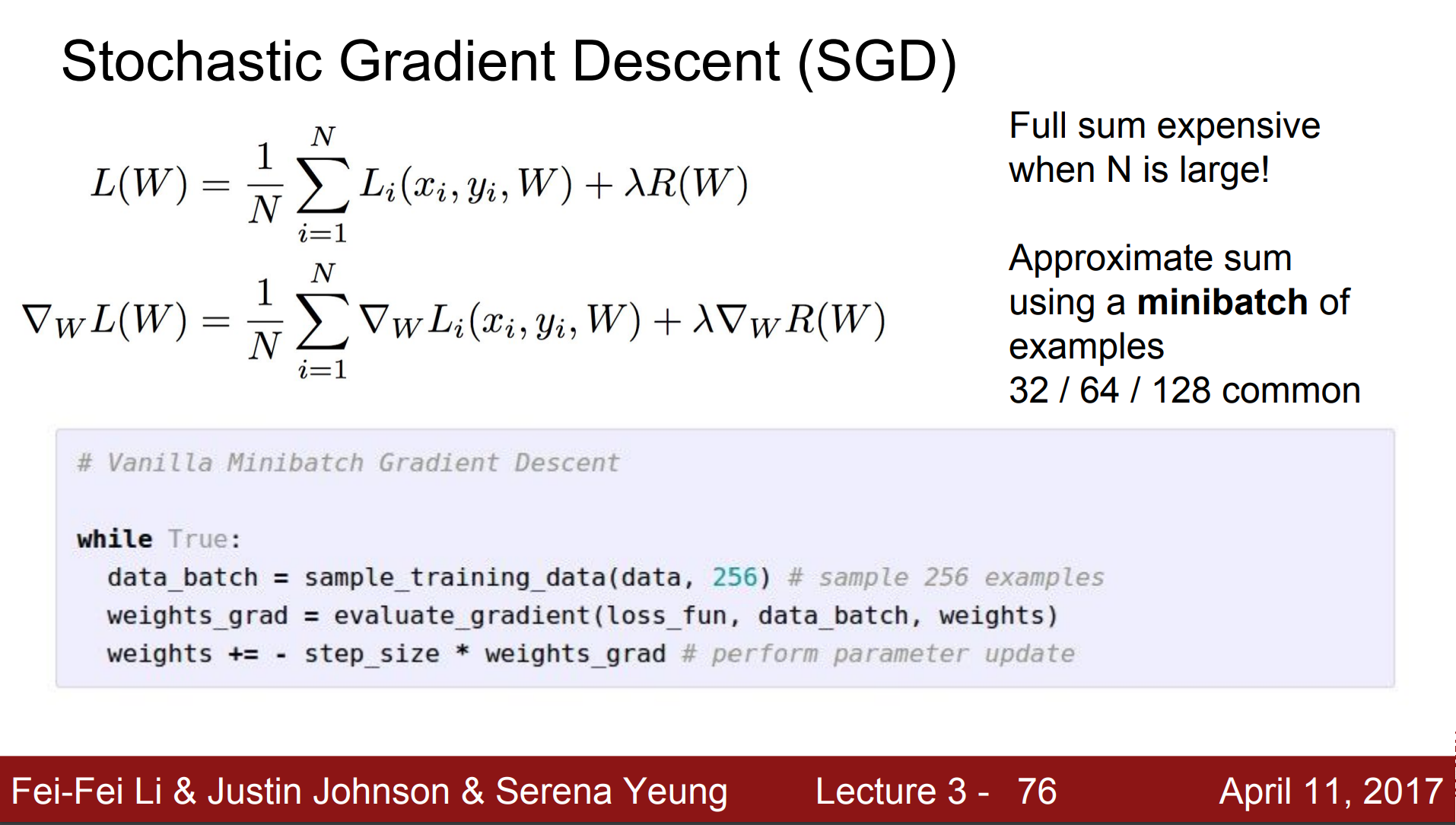

Optimization

Q. Then, how do we find the best W with the loss? Optimization!

1. Random Search - Bad idea... bad accuracy...

2. Follow the slope : Gradient descent

How to?

But...

Reference

https://www.youtube.com/watch?v=h7iBpEHGVNc&list=PL3FW7Lu3i5JvHM8ljYj-zLfQRF3EO8sYv

'Computer Science and Engineering > Computer Vision' 카테고리의 다른 글

| CS231n Lecture 05. Convolutional Neural Networks (0) | 2025.03.19 |

|---|---|

| CS231n Lecture 04. Neural Networks and Backpropagation (1) | 2024.01.24 |

| CS231n Lecture 02. Image Classification (0) | 2024.01.05 |

| CS231n Lecture 01. Introduction (0) | 2024.01.03 |